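A GBase 8a database cluster can be scaled in from its existing configuration: you can remove several data nodes, several management (coordinator) nodes, or both at once. Scaling in data nodes requires creating a new distribution strategy and redistributing the data onto the new distribution. This article walks through the procedure step by step.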

For the opposite operation, scale-out, see the article "GBase 8a 扩容操作详细实例" (a detailed GBase 8a scale-out example).

Environment

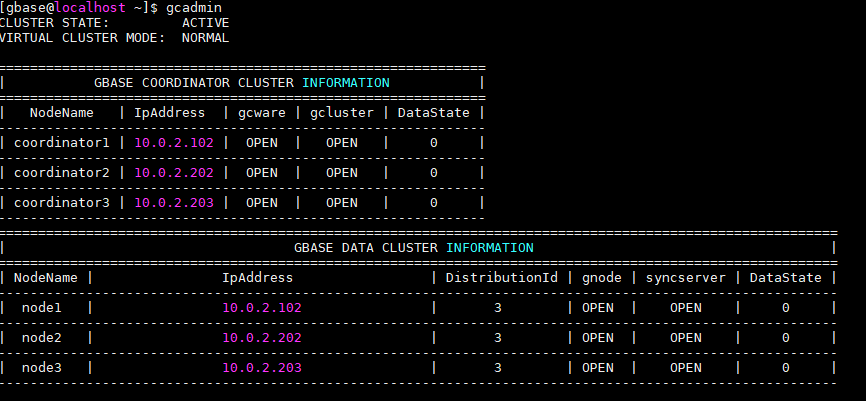

The test environment is a V95 cluster with 3 peer nodes. The goal of this scale-in is to remove node 203 (10.0.2.203) from the cluster services.

The current cluster state and data distribution are shown below.

[gbase@localhost ~]$ gcadmin

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

=============================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

=============================================================

| NodeName | IpAddress | gcware | gcluster | DataState |

-------------------------------------------------------------

| coordinator1 | 10.0.2.102 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| coordinator2 | 10.0.2.202 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| coordinator3 | 10.0.2.203 | OPEN | OPEN | 0 |

-------------------------------------------------------------

=========================================================================================================

| GBASE DATA CLUSTER INFORMATION |

=========================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------

| node1 | 10.0.2.102 | 3 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node2 | 10.0.2.202 | 3 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node3 | 10.0.2.203 | 3 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

Write the configuration file for the new distribution strategy

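Because data services are being scaled in, first write a new distribution strategy that leaves out the IPs of the data nodes to be removed. This example keeps nodes 102 and 202.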

[gbase@localhost gcinstall]$ cp gcChangeInfo.xml gcChangeInfo_2node.xml

[gbase@localhost gcinstall]$ vi gcChangeInfo_2node.xml

[gbase@localhost gcinstall]$ cat gcChangeInfo_2node.xml

<?xml version="1.0" encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.2.102"/>

<node ip="10.0.2.202"/>

</rack>

</servers>

[gbase@localhost gcinstall]$
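When several nodes are involved, the file can be generated instead of edited by hand. The following is a minimal sketch in Python that reproduces the layout of the file shown above (one rack, one node element per kept IP). The function name `build_change_info` is made up for this illustration, and the sketch assumes a single rack, as in this example.

```python
from xml.sax.saxutils import quoteattr


def build_change_info(node_ips):
    """Return gcChangeInfo-style XML text listing node_ips in a single rack."""
    lines = ['<?xml version="1.0" encoding="utf-8"?>', "<servers>", "  <rack>"]
    # One <node ip="..."/> element per data node that stays in the cluster.
    lines += [f"    <node ip={quoteattr(ip)}/>" for ip in node_ips]
    lines += ["  </rack>", "</servers>"]
    return "\n".join(lines) + "\n"


all_nodes = ["10.0.2.102", "10.0.2.202", "10.0.2.203"]
to_remove = {"10.0.2.203"}
kept = [ip for ip in all_nodes if ip not in to_remove]
print(build_change_info(kept))
```

The output can be saved as gcChangeInfo_2node.xml and passed to gcadmin distribution as in the next step.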

Create the new distribution strategy

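The existing distribution ID is 3 and the new one is 5. The IDs are not consecutive because this cluster has already been through several test operations. At a production site the first operation usually starts at 1 and later IDs are consecutive.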

In the new distribution, nodes 102 and 202 back each other up.

[gbase@localhost gcinstall]$ gcadmin distribution gcChangeInfo_2node.xml p 1 d 1

gcadmin generate distribution ...

gcadmin generate distribution successful

[gbase@localhost gcinstall]$ gcadmin showdistribution

Distribution ID: 5 | State: new | Total segment num: 2

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 10.0.2.102 | 1 | 10.0.2.202 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.2.202 | 2 | 10.0.2.102 |

========================================================================================================================

Distribution ID: 3 | State: old | Total segment num: 3

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 10.0.2.102 | 1 | 10.0.2.202 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.2.202 | 2 | 10.0.2.203 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.2.203 | 3 | 10.0.2.102 |

========================================================================================================================

[gbase@localhost gcinstall]$
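Before moving on, it is worth confirming that the new distribution no longer references the node being removed. The sketch below parses text in the format printed by gcadmin showdistribution above. It is only an illustration: the helper names are invented here, and the regular expressions assume the exact layout shown in this article.

```python
import re

HEADER = re.compile(r"Distribution ID:\s*(\d+)\s*\|\s*State:\s*(\w+)")
ROW = re.compile(r"^\|\s*([\d.]+)\s*\|\s*(\d+)\s*\|\s*([\d.]+)\s*\|$")


def parse_distributions(text):
    """Map distribution ID -> {'state': str, 'segments': [(primary, id, duplicate)]}."""
    dists = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        header = HEADER.search(line)
        if header:
            current = {"state": header.group(2), "segments": []}
            dists[int(header.group(1))] = current
            continue
        row = ROW.match(line)
        if row and current is not None:
            current["segments"].append(
                (row.group(1), int(row.group(2)), row.group(3))
            )
    return dists


def nodes_in(dist):
    """Return every IP used as a primary or duplicate segment node."""
    ips = set()
    for primary, _segment_id, duplicate in dist["segments"]:
        ips.update((primary, duplicate))
    return ips
```

With the output above, `nodes_in(parse_distributions(text)[5])` contains only 10.0.2.102 and 10.0.2.202, while distribution 3 still includes 10.0.2.203.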

Initialize and redistribute the data

After the rebalance starts, watch the gclusterdb.rebalancing_status table until every table has finished redistributing.

[gbase@localhost gcinstall]$ gccli

GBase client 9.5.2.17.115980. Copyright (c) 2004-2020, GBase. All Rights Reserved.

gbase> initnodedatamap;

Query OK, 0 rows affected, 6 warnings (Elapsed: 00:00:01.40)

gbase> rebalance instance;

Query OK, 21 rows affected (Elapsed: 00:00:01.18)

gbase> use gclusterdb;

Query OK, 0 rows affected (Elapsed: 00:00:00.01)

gbase> show tables;

+-------------------------+

| Tables_in_gclusterdb |

+-------------------------+

| audit_log_express |

| dual |

| import_audit_log_errors |

| rebalancing_status |

| sys_sqls |

| sys_sqls_elapsepernode |

+-------------------------+

6 rows in set (Elapsed: 00:00:00.00)

gbase> desc rebalancing_status;

+-----------------+--------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+-----------------+--------------+------+-----+---------+-------+

| index_name | varchar(129) | YES | | NULL | |

| db_name | varchar(64) | YES | | NULL | |

| table_name | varchar(64) | YES | | NULL | |

| tmptable | varchar(129) | YES | | NULL | |

| start_time | datetime | YES | | NULL | |

| end_time | datetime | YES | | NULL | |

| status | varchar(32) | YES | | NULL | |

| percentage | int(11) | YES | | NULL | |

| priority | int(11) | YES | | NULL | |

| host | varchar(32) | YES | | NULL | |

| distribution_id | bigint(8) | YES | | NULL | |

+-----------------+--------------+------+-----+---------+-------+

11 rows in set (Elapsed: 00:00:00.00)

gbase> select status,count(*) from gclusterdb.rebalancing_status group by status;

+-----------+----------+

| status | count(*) |

+-----------+----------+

| COMPLETED | 21 |

+-----------+----------+

1 row in set (Elapsed: 00:00:00.15)
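On a cluster with many tables the rebalance takes longer than in this test, so the status query has to be repeated. The following Python sketch shows one way to structure that wait. It is an assumption-laden outline: `fetch_status_counts` is a hypothetical callable that you supply (for example a wrapper that runs the GROUP BY query above through your own client and returns a dict such as `{"COMPLETED": 21}`). The only status value confirmed by this article is COMPLETED; every other status is simply treated as "not finished yet".

```python
import time


def wait_for_rebalance(fetch_status_counts, interval_s=10.0, timeout_s=3600.0,
                       sleep=time.sleep):
    """Poll until no status other than COMPLETED has a non-zero count.

    fetch_status_counts: caller-supplied function returning {status: count},
    i.e. the result of
        select status,count(*) from gclusterdb.rebalancing_status group by status;
    Returns the final counts, or raises TimeoutError after timeout_s seconds.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        counts = fetch_status_counts()
        pending = {s: n for s, n in counts.items() if s != "COMPLETED" and n > 0}
        if not pending:
            return counts
        if time.monotonic() >= deadline:
            raise TimeoutError(f"rebalance still pending: {pending}")
        sleep(interval_s)
```

Passing the query function in as a parameter keeps the loop independent of any particular database driver and makes it easy to exercise with canned results.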

Check for leftovers

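Check the gbase.table_distribution table for tables that are still on the old distribution ID 3. If any remain, manually run rebalance table <database>.<table> for each one and wait for that redistribution to finish.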

gbase> desc gbase.table_distribution;

+----------------------+---------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+----------------------+---------------+------+-----+---------+-------+

| index_name | varchar(128) | NO | PRI | NULL | |

| dbName | varchar(64) | NO | | NULL | |

| tbName | varchar(64) | NO | | NULL | |

| isReplicate | varchar(3) | NO | | YES | |

| hash_column | varchar(4096) | YES | | NULL | |

| lmt_storage_size | bigint(20) | YES | | NULL | |

| table_storage_size | bigint(20) | YES | | NULL | |

| is_nocopies | varchar(3) | NO | | YES | |

| data_distribution_id | bigint(8) | NO | | NULL | |

| vc_id | varchar(64) | NO | PRI | NULL | |

| mirror_vc_id | varchar(64) | YES | | NULL | |

+----------------------+---------------+------+-----+---------+-------+

11 rows in set (Elapsed: 00:00:00.00)

gbase> select count(*) from gbase.table_distribution where data_distribution_id=3;

+----------+

| count(*) |

+----------+

| 0 |

+----------+

1 row in set (Elapsed: 00:00:00.00)
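In this test the count is 0, so nothing is left behind. If the count were non-zero, the leftover tables could be listed with a query on the dbName and tbName columns shown in the desc output, and one rebalance statement generated per row. A small Python sketch of that step follows; the function name and the sample rows in the usage lines are invented for illustration.

```python
# Query to list tables still on the old distribution (ID 3 in this article).
LEFTOVER_QUERY = (
    "select dbName, tbName from gbase.table_distribution "
    "where data_distribution_id=3;"
)


def rebalance_statements(rows):
    """Turn (dbName, tbName) rows into 'rebalance table db.table;' statements."""
    return [f"rebalance table {db}.{tb};" for db, tb in rows]


# Example with made-up table names:
for stmt in rebalance_statements([("testdb", "t1"), ("testdb", "t2")]):
    print(stmt)
```

After running the generated statements, repeat the rebalancing_status check and the count query until the count for the old ID reaches 0.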

Drop the old nodedatamap

gbase> refreshnodedatamap drop 3;

Query OK, 0 rows affected, 6 warnings (Elapsed: 00:00:01.61)

Remove the old distribution strategy

[gbase@localhost gcinstall]$ gcadmin rmdistribution 3

cluster distribution ID [3]

it will be removed now

please ensure this is ok, input [Y,y] or [N,n]: y

gcadmin remove distribution [3] success

[gbase@localhost gcinstall]$ gcadmin showdistribution

Distribution ID: 5 | State: new | Total segment num: 2

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 10.0.2.102 | 1 | 10.0.2.202 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.2.202 | 2 | 10.0.2.102 |

========================================================================================================================

[gbase@localhost gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

=============================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

=============================================================

| NodeName | IpAddress | gcware | gcluster | DataState |

-------------------------------------------------------------

| coordinator1 | 10.0.2.102 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| coordinator2 | 10.0.2.202 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| coordinator3 | 10.0.2.203 | OPEN | OPEN | 0 |

-------------------------------------------------------------

=========================================================================================================

| GBASE DATA CLUSTER INFORMATION |

=========================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------

| node1 | 10.0.2.102 | 5 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node2 | 10.0.2.202 | 5 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node3 | 10.0.2.203 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

Remove the registration of the scaled-in data node

Note that this file contains only the IP being removed, 10.0.2.203.

[gbase@localhost gcinstall]$ cat gcChangeInfo_delete203.xml

<?xml version="1.0" encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.2.203"/>

</rack>

</servers>

[gbase@localhost gcinstall]$

[gbase@localhost gcinstall]$ gcadmin rmnodes gcChangeInfo_delete203.xml

gcadmin remove nodes ...

gcadmin rmnodes from cluster success

[gbase@localhost gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

=============================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

=============================================================

| NodeName | IpAddress | gcware | gcluster | DataState |

-------------------------------------------------------------

| coordinator1 | 10.0.2.102 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| coordinator2 | 10.0.2.202 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| coordinator3 | 10.0.2.203 | CLOSE | CLOSE | 0 |

-------------------------------------------------------------

=========================================================================================================

| GBASE DATA CLUSTER INFORMATION |

=========================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------

| node1 | 10.0.2.102 | 5 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node2 | 10.0.2.202 | 5 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

Stop services on all nodes

[gbase@localhost gcinstall]$ gcluster_services all stop

Stopping GCMonit success!

Stopping gcrecover : [ OK ]

Stopping gcluster : [ OK ]

Stopping gcware : [ OK ]

Stopping gbase : [ OK ]

Stopping syncserver : [ OK ]

[gbase@localhost gcinstall]$

[gbase@localhost gcinstall]$ ssh 10.0.2.202 "gcluster_services all stop"

gbase@10.0.2.202's password:

Stopping GCMonit success!

Stopping gcrecover : [ OK ]

Stopping gcluster : [ OK ]

Stopping gcware : [ OK ]

Stopping gbase : [ OK ]

Stopping syncserver : [ OK ]

[gbase@localhost gcinstall]$

[gbase@localhost gcinstall]$ ssh 10.0.2.203 "gcluster_services all stop"

gbase@10.0.2.203's password:

Stopping GCMonit success!

Stopping gcrecover : [ OK ]

Stopping gcluster : [ OK ]

Stopping gcware : [ OK ]

Stopping gbase : [ OK ]

Stopping syncserver : [ OK ]

[gbase@localhost gcinstall]$
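The same stop command is run once locally and once per remote node over ssh. For larger clusters the command list can be generated rather than typed. The sketch below only builds and prints the commands (a dry run); it does not execute anything. The function name is hypothetical, and it assumes the gbase user can reach each host with ssh as in the log above.

```python
import shlex


def stop_commands(local_ip, all_ips):
    """Return one argv list per node that stops all GBase services there."""
    commands = []
    for ip in all_ips:
        if ip == local_ip:
            # Run directly on the node we are logged in to.
            commands.append(["gcluster_services", "all", "stop"])
        else:
            # Run remotely, exactly as in the ssh lines above.
            commands.append(["ssh", ip, "gcluster_services all stop"])
    return commands


for argv in stop_commands("10.0.2.102", ["10.0.2.102", "10.0.2.202", "10.0.2.203"]):
    print(shlex.join(argv))
```

Each argv list could be handed to `subprocess.run` once the printed commands have been reviewed.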

Uninstall the coordinator and data node services being scaled in

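Go to the gcinstall directory extracted from the installation package. In a copy of demo.options (demo_delete.options in this example), fill in the IPs of the coordinator and data nodes to remove. Put the IPs of the nodes that remain in the parameters whose names start with exist.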

Run unInstall.py to uninstall the database services.

[gbase@localhost gcinstall]$ pwd

/home/gbase/gcinstall

[gbase@localhost gcinstall]$

[gbase@localhost gcinstall]$

[gbase@localhost gcinstall]$ cat demo_delete.options

installPrefix= /opt/gbase

coordinateHost = 10.0.2.203

coordinateHostNodeID = 203

dataHost = 10.0.2.203

existCoordinateHost =10.0.2.102,10.0.2.202

existDataHost =10.0.2.102,10.0.2.202

dbaUser = gbase

dbaGroup = gbase

dbaPwd = 'gbase1234'

rootPwd = '111111'

#rootPwdFile = rootPwd.json

genDBPwd=''

[gbase@localhost gcinstall]$ ./unInstall.py --silent=demo_delete.options

These GCluster nodes will be uninstalled.

CoordinateHost:

10.0.2.203

DataHost:

10.0.2.203

Are you sure to uninstall GCluster ([Y,y]/[N,n])? y

10.0.2.203 unInstall 10.0.2.203 successfully.

Update all coordinator gcware conf.

10.0.2.202 update gcware conf successfully.

10.0.2.102 update gcware conf successfully.

[gbase@localhost gcinstall]$ gcadmin

[gcadmin] Could not initialize CRM instance error: [122]->[can not connect to any server]

[gbase@localhost gcinstall]$ gcluster_services all start

Starting gcware : [ OK ]

Starting gcluster : [ OK ]

Starting gcrecover : [ OK ]

Starting gbase : [ OK ]

Starting syncserver : [ OK ]

Starting GCMonit success!

[gbase@localhost gcinstall]$ ssh 10.0.2.203 "gcluster_services all start"

gbase@10.0.2.203's password:

bash: gcluster_services: command not found

[gbase@localhost gcinstall]$ ssh 10.0.2.202 "gcluster_services all start"

gbase@10.0.2.202's password:

Starting gcware : [ OK ]

Starting gcluster : [ OK ]

Starting gcrecover : [ OK ]

Starting gbase : [ OK ]

Starting syncserver : [ OK ]

Starting GCMonit success!
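The host-related lines of demo_delete.options follow directly from two lists: the nodes to remove and the nodes that remain. The Python sketch below derives those lines, using the parameter names from the file shown above. The function name is invented, and leaving out coordinateHost when no coordinator is removed is an assumption based on the advice to fill in only dataHost for a data-only scale-in; whether unInstall.py accepts a missing key rather than an empty one has not been verified here.

```python
def host_option_lines(remove_coord, remove_data, keep_coord, keep_data,
                      coord_node_ids=()):
    """Build the host-related lines of an uninstall options file."""
    lines = []
    if remove_coord:
        lines.append("coordinateHost = " + ",".join(remove_coord))
        # Node IDs are supplied by the caller (203 for 10.0.2.203 in this article).
        lines.append(
            "coordinateHostNodeID = " + ",".join(str(i) for i in coord_node_ids)
        )
    if remove_data:
        lines.append("dataHost = " + ",".join(remove_data))
    # Nodes that stay in the cluster go into the exist* parameters.
    lines.append("existCoordinateHost = " + ",".join(keep_coord))
    lines.append("existDataHost = " + ",".join(keep_data))
    return lines


remaining = ["10.0.2.102", "10.0.2.202"]
for line in host_option_lines(["10.0.2.203"], ["10.0.2.203"],
                              remaining, remaining, coord_node_ids=[203]):
    print(line)
```

The remaining settings (installPrefix, dbaUser, passwords and so on) are site-specific and are left to be filled in by hand.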

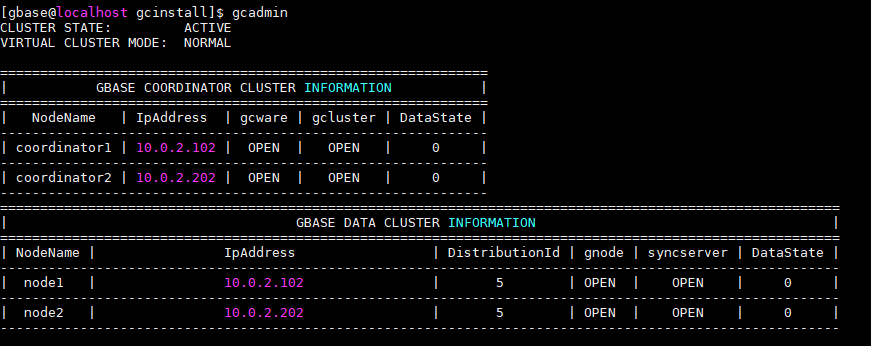

Final result

The goal has been reached: the cluster is now scaled in to 2 nodes.

[gbase@localhost gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

=============================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

=============================================================

| NodeName | IpAddress | gcware | gcluster | DataState |

-------------------------------------------------------------

| coordinator1 | 10.0.2.102 | OPEN | OPEN | 0 |

-------------------------------------------------------------

| coordinator2 | 10.0.2.202 | OPEN | OPEN | 0 |

-------------------------------------------------------------

=========================================================================================================

| GBASE DATA CLUSTER INFORMATION |

=========================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------

| node1 | 10.0.2.102 | 5 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node2 | 10.0.2.202 | 5 | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------
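The final state can also be checked mechanically. The sketch below parses text in the gcadmin format shown above and verifies that the removed IP is gone, that every remaining service is OPEN, and that all data nodes are on the expected distribution ID. The function names are made up for this example, and the parsing relies on the node names starting with "coordinator" and "node" as they do in this cluster.

```python
def parse_gcadmin(text):
    """Return (coordinators, data_nodes) as lists of cell lists."""
    coordinators, data_nodes = [], []
    for raw in text.splitlines():
        cells = [c.strip() for c in raw.strip().strip("|").split("|")]
        if len(cells) == 5 and cells[0].startswith("coordinator"):
            coordinators.append(cells)  # name, ip, gcware, gcluster, state
        elif len(cells) == 6 and cells[0].startswith("node"):
            data_nodes.append(cells)  # name, ip, dist id, gnode, syncserver, state
    return coordinators, data_nodes


def scale_in_ok(text, removed_ip, expected_dist_id):
    """Check that removed_ip is gone and the remaining nodes look healthy."""
    coordinators, data_nodes = parse_gcadmin(text)
    ips = {row[1] for row in coordinators + data_nodes}
    if removed_ip in ips or not data_nodes:
        return False
    coord_open = all(row[2] == "OPEN" and row[3] == "OPEN" for row in coordinators)
    data_open = all(row[3] == "OPEN" and row[4] == "OPEN" for row in data_nodes)
    on_new_dist = all(row[2] == str(expected_dist_id) for row in data_nodes)
    return coord_open and data_open and on_new_dist
```

For the output above, `scale_in_ok(text, "10.0.2.203", 5)` evaluates to True. Run against the output captured before rmnodes, it evaluates to False because 10.0.2.203 is still listed.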

Summary

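The first priority in a scale-in is data safety, so start by redistributing the data onto the new distribution and confirm that the rebalance has completed. Next, remove the old registration information from the cluster. Finally, uninstall the software from the servers being removed.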

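If only coordinator nodes are being scaled in, no data is involved, so you can go straight to the step that uninstalls the coordinator service. Services on every node still have to be stopped first.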

If only data nodes are being scaled in, fill in only dataHost in the final uninstall step.
